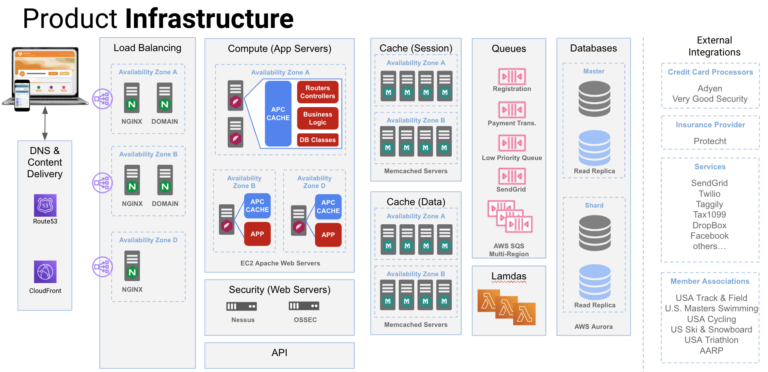

We are combining our yearly Infrastructure Report with our Thanksgiving recap, since Thanksgiving is by far the busiest day for our infrastructure.

We Served Our Customers Well on Thanksgiving

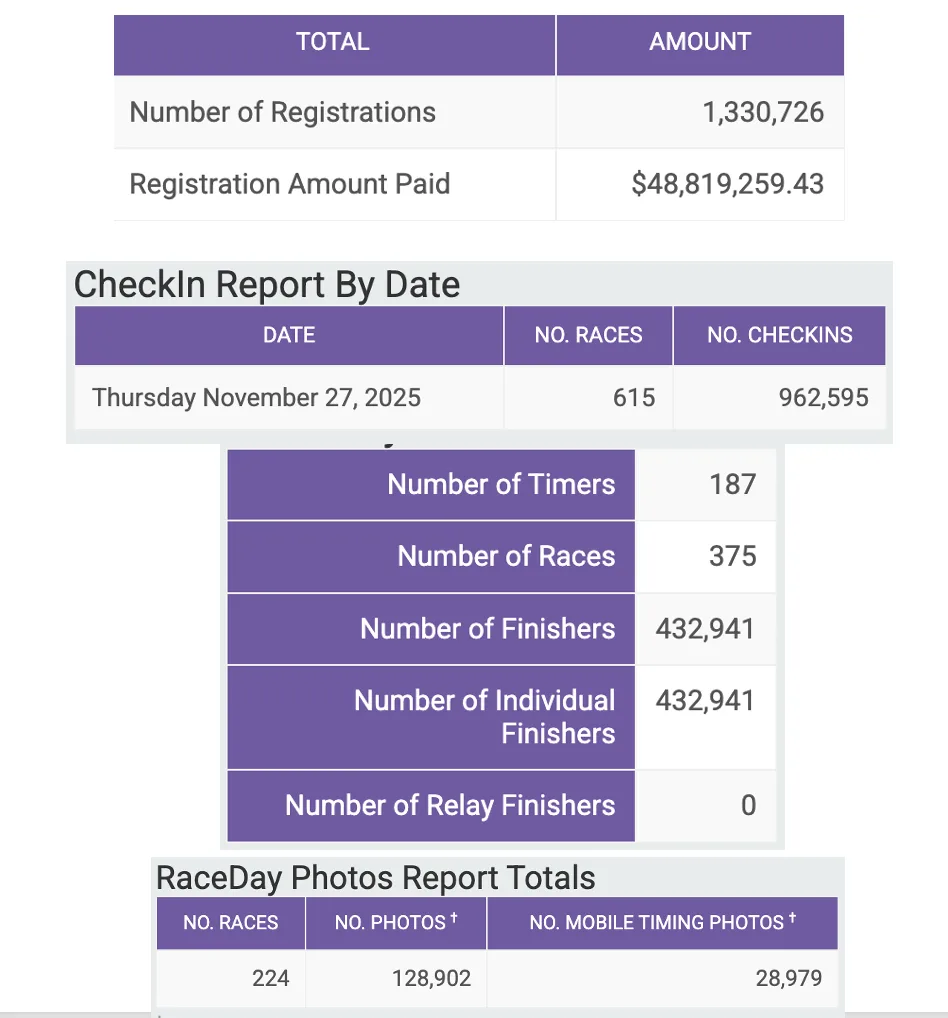

Here are some impressive stats on our Thanksgiving volume. 1.3 million people registered for a Turkey Trot on RunSignup this year, producing nearly $50 million in registrations. Those registrations came from over 1,000 races, 389 of which had over 1,000 registrations each. 615 races used our Checkin App to record 962,595 participants. Our race day tools were used in 375 races to record the times of 432,941 finishers. 224 races uploaded 128,902 photos, and our new Mobile Timing app uploaded over 28,000. Almost 600,000 result notifications were sent. All for free.

Our open platform also handled hundreds of thousands of API requests from a variety of scoring software, including Agee Timing, RunScore, RMTiming and others. We also supported 73 races with RaceResult Scoring participant sync.

This was also the first year our AI Chatbot helped events with customer service. The largest user of the Chatbot was the 130th Annual YMCA Buffalo Turkey Trot. Yes, the oldest race in America made use of the most advanced technology, thanks to the involvement of Leone Timing. They had a total of 1,284 chats with 1,999 messages in the past month for their race of 15,000 participants. Overall, we had 2,300 chats with 3,700 messages in the past 7 days. We are just at the beginning of using AI technology and can’t wait to see how these numbers grow next year. Our guess is over 50,000 chats the week before Thanksgiving!

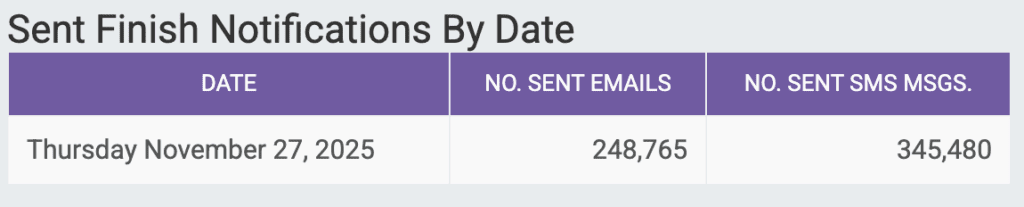

57,800 Requests per Minute Peak

We hit a peak of nearly 1,000 requests per second around 10AM Eastern.

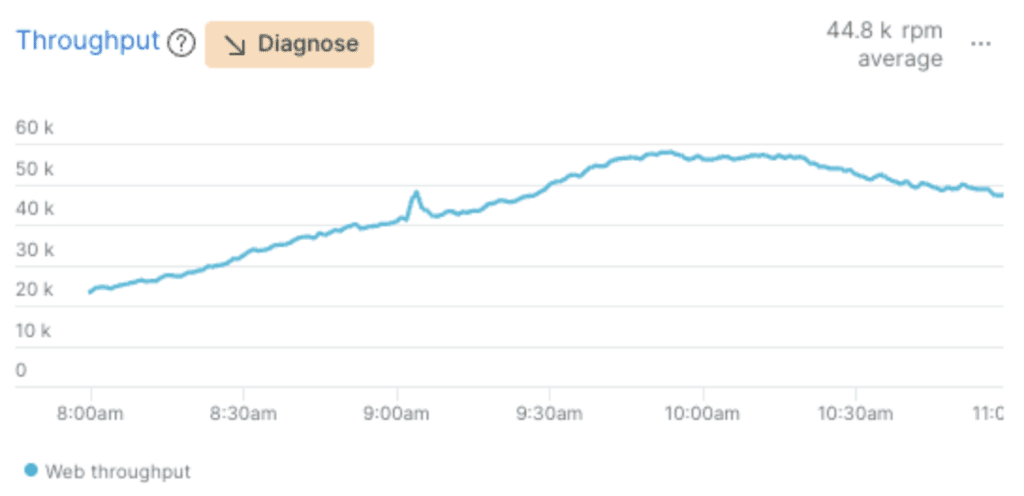

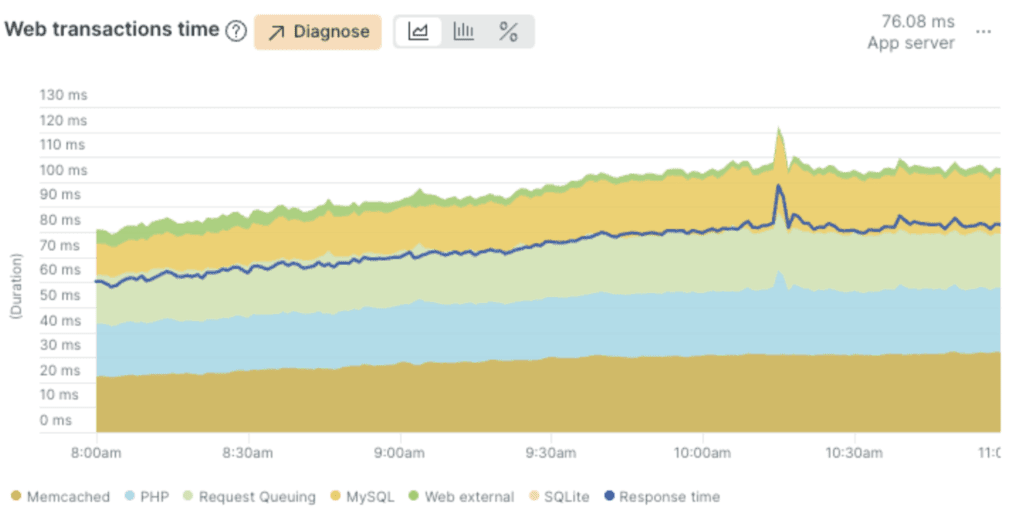

100% Uptime with Sub-Second Performance

Our infrastructure stayed FAST. Response time remained below 0.11 seconds across all requests, including reports.

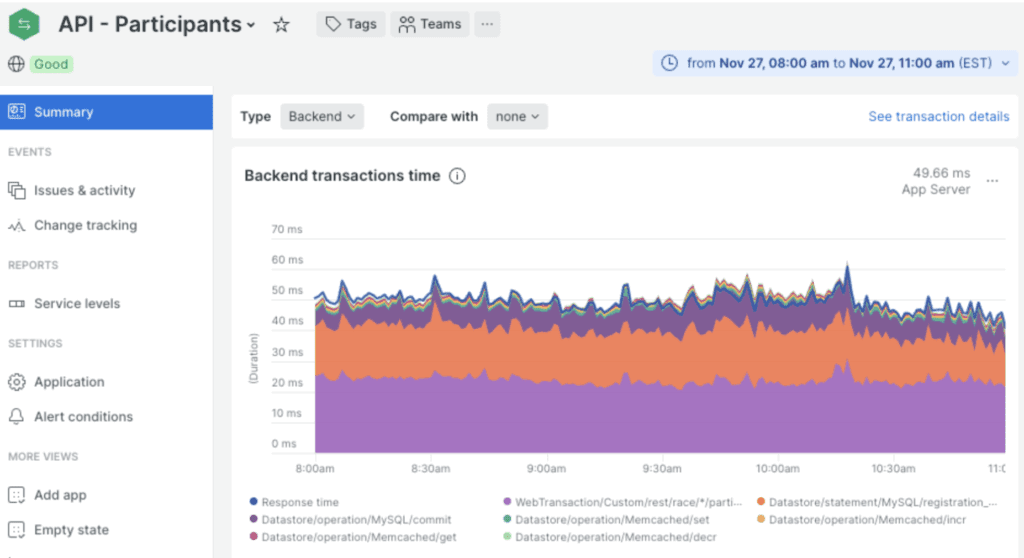

API Response Time of 0.045 Seconds

One of the key parts of our infrastructure is our API. We upgraded some of that infrastructure this year to prepare for wider use of AI technology, which relies on our API as a foundational element, and it performed very well. Our RaceDay Scoring and RaceDay Checkin apps use the participant API, as does all of the third-party software that accesses and updates participant data. The average response time was a super fast 45 milliseconds.

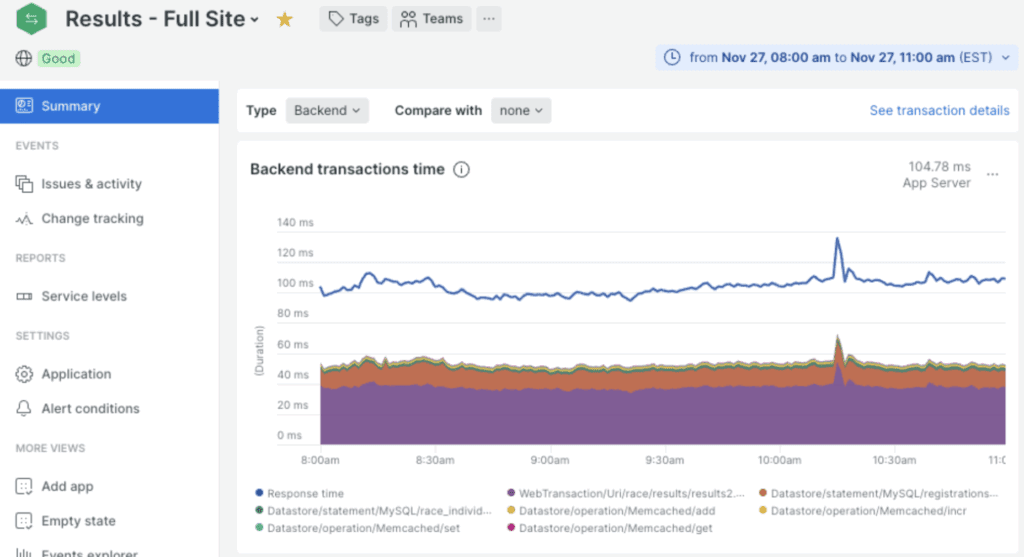

Results Averaged 0.1 Seconds

Results pages were searched millions of times on Thanksgiving (in addition to the almost 600,000 result notifications we sent!), and performance was great.

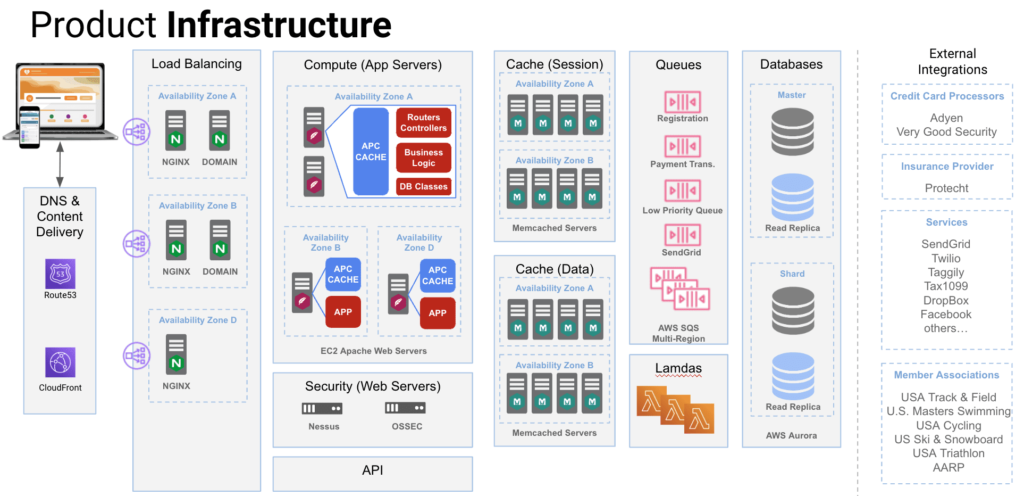

2025 Experiment – Over-Provisioning Our Normal Infrastructure (4-6X)

Thanksgiving is a day when we can experiment, with low risk, to see how our infrastructure behaves under heavy load. Last year we basically left it as it is on any other day of the year. We saw stress at the NGINX level and had to add a server during the morning (we learned from that and have since changed how we configure NGINX). We also saw that our database Read Replica was highly utilized, and we have since added another read replica to our architecture. All other tiers of our multi-tier architecture were nowhere near maximum.

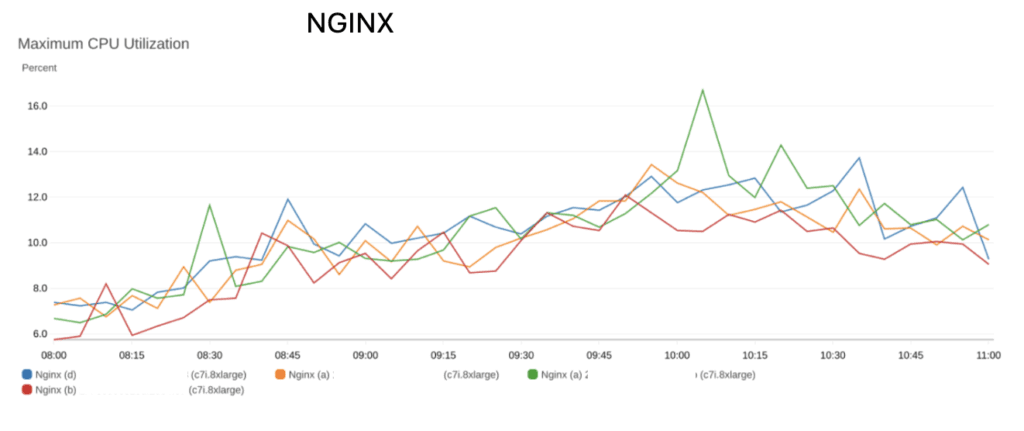

This year we wanted to see what would happen if we added a bunch of capacity. We moved our 4 NGINX servers from c7i.2xlarge to c7i.8xlarge instances, effectively quadrupling their size. The result was what we anticipated: very low server utilization even at peak (one server hit a maximum of 16%).

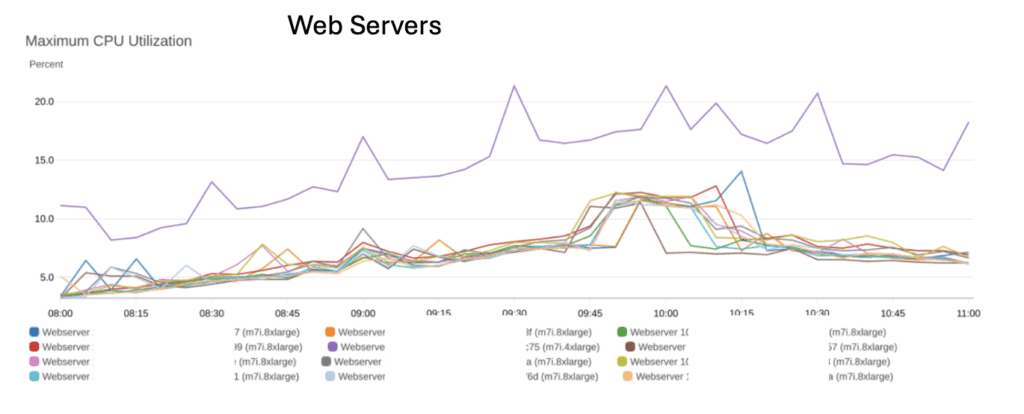

For the web server tier, we also went from 2XLarge to 8XLarge, and from 8 to 12 web servers. We left one web server that we use for a bunch of administrative tasks at 4XLarge (no worries: it fails over automatically). That was our busiest web server. The web servers taking the load peaked at roughly 10%.
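As a rough way to see where the "4-6X" in the heading comes from, here is a small Python sketch that compares total vCPUs per tier before and after the change. It assumes capacity scales linearly with vCPU count, assumes the web tier uses the same c7i family as the NGINX tier (the post only says 2XLarge and 8XLarge), and leaves out the 4XLarge administrative server. The vCPU counts are AWS's published sizes for c7i instances; the function names are hypothetical.

```python
# vCPU counts for the EC2 c7i sizes mentioned in the post (AWS published sizes).
C7I_VCPUS = {"2xlarge": 8, "4xlarge": 16, "8xlarge": 32, "48xlarge": 192}


def tier_vcpus(servers: int, size: str) -> int:
    """Total vCPUs in a tier of identical instances."""
    return servers * C7I_VCPUS[size]


def capacity_multiplier(before: tuple, after: tuple) -> float:
    """Tier vCPUs after the change divided by tier vCPUs before it.

    Assumes capacity scales linearly with vCPU count.
    """
    return tier_vcpus(*after) / tier_vcpus(*before)


# NGINX: 4 servers, c7i.2xlarge -> c7i.8xlarge (server count unchanged).
nginx = capacity_multiplier((4, "2xlarge"), (4, "8xlarge"))
# Web tier: 8 x 2XLarge -> 12 x 8XLarge (assumed c7i; admin server excluded).
web = capacity_multiplier((8, "2xlarge"), (12, "8xlarge"))

print(f"NGINX tier: {nginx:.0f}x, web tier: {web:.0f}x")
```

This prints 4x for the NGINX tier (bigger servers only) and 6x for the web tier (bigger servers and 50% more of them), which brackets the 4-6X range.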

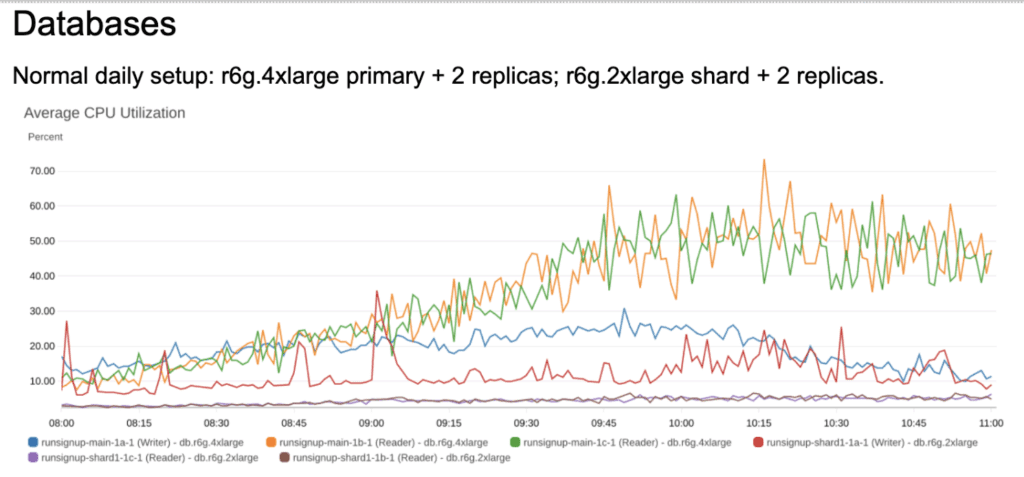

The Read Replicas were the only part of the infrastructure that broke a sweat. Most results lookups, along with any web page content that is not cached, are served from the Read Replicas. When they get busy, response times can slow down, but as the graphs above show, there were no real slowdowns at that level. Even so, next year we will add more read replicas and upgrade them to larger servers.
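The read/write split described above can be illustrated with a minimal, hypothetical Python router: writes go to the primary, and reads rotate round-robin across the read replicas. The class, host names, and table names are made up for illustration and do not reflect how RunSignup actually routes queries; a production router would also need to handle replication lag and locking reads.

```python
import itertools


class ReplicaRouter:
    """Hypothetical read/write splitter (illustration only).

    Writes go to the primary; reads rotate round-robin across read replicas.
    """

    def __init__(self, primary: str, replicas: list) -> None:
        self.primary = primary
        # With no replicas configured, reads fall back to the primary.
        self._reads = itertools.cycle(replicas or [primary])

    def route(self, sql: str) -> str:
        """Return the host that should run this SQL statement."""
        # Simplification: treat any SELECT as a read. Real routers must also
        # consider replication lag and locking reads such as SELECT ... FOR UPDATE.
        if sql.lstrip().upper().startswith("SELECT"):
            return next(self._reads)
        return self.primary


router = ReplicaRouter("primary-db", ["replica-1", "replica-2"])
print(router.route("SELECT * FROM results WHERE bib = 101"))  # replica-1
print(router.route("SELECT * FROM results WHERE bib = 102"))  # replica-2
print(router.route("UPDATE participants SET checked_in = 1 WHERE id = 7"))  # primary-db
```

With this shape, adding a read replica just means adding another host to the rotation, which spreads results lookups across more servers.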

What We Learned in 2025

We learned several valuable things, mostly that our infrastructure can easily be scaled. We spent about half a day on this setup (we do have auto-scaling, but decided not to test it because we did not want our team worrying on Thanksgiving!). The good news is that the levers we have for handling future growth work well:

- We can add a large number of servers and expand linearly.

- We can increase the size of servers and grow linearly. We normally run on 2XLarge and can expand to 48XLarge.

- Our writer databases (today we have a primary and a shard database) can also scale with larger hardware, and we can add 13 more read replicas.

We estimate that we could easily handle 10X the load we had yesterday, about 10,000 requests per second, with our current infrastructure architecture and a couple of days of work. This bodes well for supporting our continued growth over the next 20 years, although we expect more changes before then.
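The arithmetic behind the peak and the 10X estimate is easy to check. This Python sketch converts the 57,800 requests-per-minute peak to requests per second, scales it by 10, and computes the per-server headroom from running on 2XLarge versus 48XLarge. The vCPU figures (8 and 192) are the published sizes for EC2 c7i instances, and treating capacity as linear in vCPUs is a simplifying assumption, not a measured result.

```python
PEAK_REQUESTS_PER_MINUTE = 57_800  # Thanksgiving peak, from the post
LOAD_MULTIPLE = 10                 # the "10X" estimate, from the post

peak_rps = PEAK_REQUESTS_PER_MINUTE / 60
target_rps = peak_rps * LOAD_MULTIPLE

# Vertical lever: normal 2XLarge up to 48XLarge. Assumes EC2 c7i sizes
# (2xlarge = 8 vCPUs, 48xlarge = 192 vCPUs) and capacity linear in vCPUs.
VCPUS_2XLARGE, VCPUS_48XLARGE = 8, 192
per_server_headroom = VCPUS_48XLARGE / VCPUS_2XLARGE

print(f"peak: ~{peak_rps:.0f} req/s; 10X target: ~{target_rps:,.0f} req/s")
print(f"size-only headroom per server: {per_server_headroom:.0f}x")
```

This prints a peak of about 963 requests per second (the "nearly 1,000" above) and a 10X target of about 9,633, in line with "about 10,000". The 24x figure is per server and comes before adding any servers, which is the other lever.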

2025 Summary

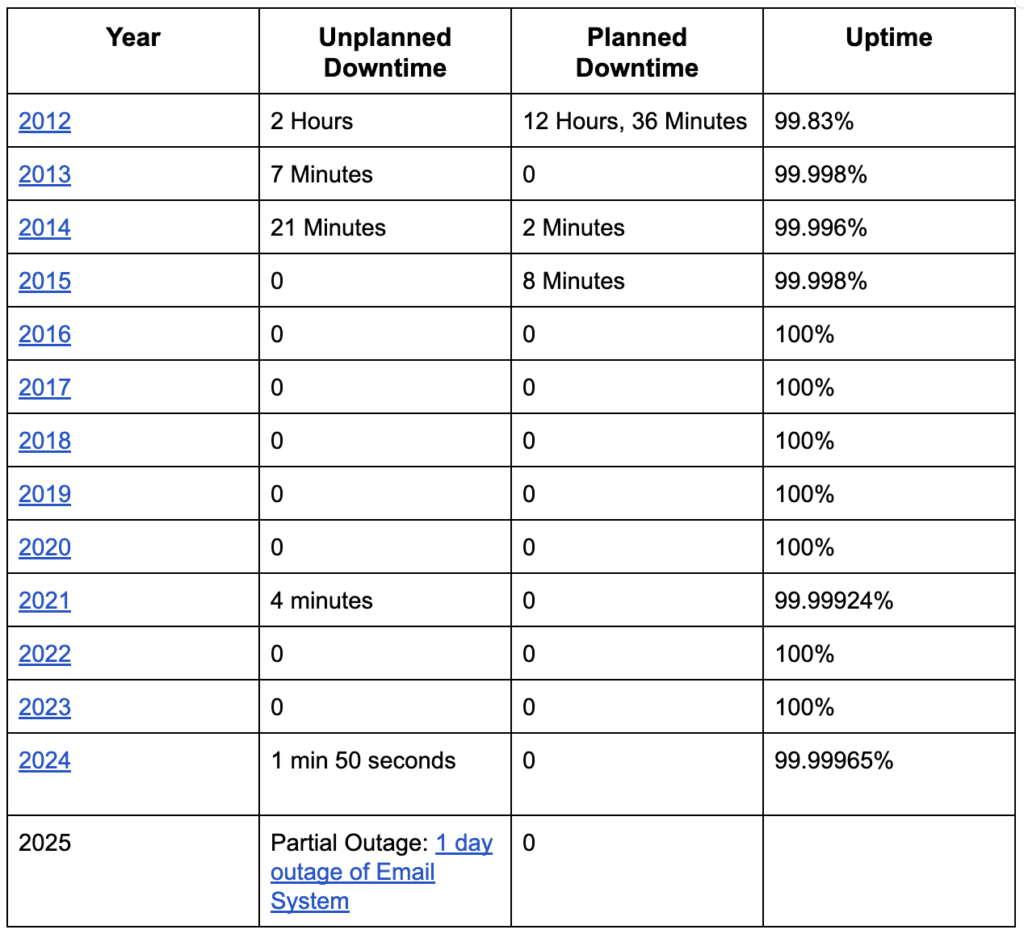

The quick recap for the year is that we had zero downtime again this year. That brings our total to 6 minutes of downtime since 2015. We stayed up on Oct. 20, when many websites had problems during the Amazon AWS outage.

We did have two issues. First, email delivery was down for a day and a half over a weekend, caused by an emergency set of upgrades we had to make for security and deliverability purposes. Second, and less important, during the Oct. 20 AWS outage our Analytics systems reported some transactions twice. It is important to note that, much like Google Analytics, our analytics system is not meant to be transactional and highly reliable like our main production server. The chart above summarizes the past 14 years.
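To put 6 minutes of downtime in perspective, here is a quick availability calculation in Python. It assumes the window runs from January 1, 2015 through the end of 2025; the post only says "since 2015", so the exact start date is an assumption.

```python
from datetime import datetime

DOWNTIME_MINUTES = 6  # total downtime since 2015, from the post

# Assumed window: January 1, 2015 through December 31, 2025 (inclusive).
window = datetime(2026, 1, 1) - datetime(2015, 1, 1)
window_minutes = window.total_seconds() / 60

availability = 1 - DOWNTIME_MINUTES / window_minutes
print(f"window: {window_minutes:,.0f} minutes; availability: {availability:.4%}")
```

With that window (about 5.8 million minutes), 6 minutes of downtime comes to roughly 99.9999% availability.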

We also made a number of nice upgrades to our infrastructure:

- Major upgrade of our payment processing, payments and KYC onboarding of new customers.

- Upgraded NGINX configurations.

- Added a Read Replica to our database tier.

- Upgraded our API infrastructure and added MCP server capabilities in preparation for growth of AI load.

- Upgraded our email infrastructure to handle the more than 1 billion email messages we expect in 2026.

Summary

We continue to invest in our infrastructure to ensure we are a reliable, stable technology platform for our customers. We appreciate your trust in our business.